Introduction

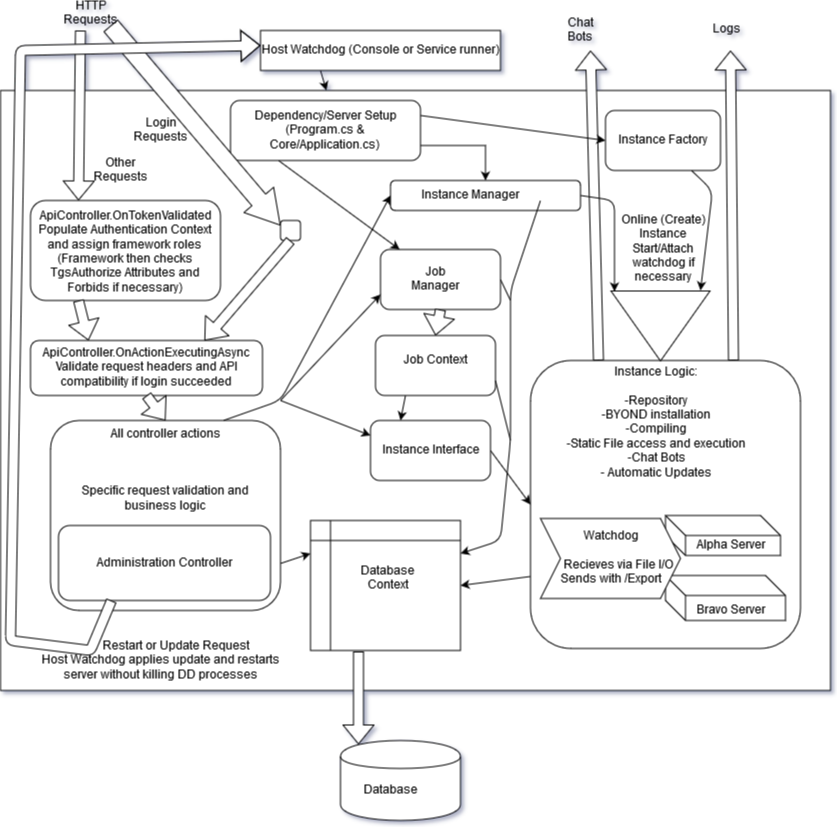

This is meant to be a brief overview of the TGS architecture, giving new coders direction on where to code and giving the curious some insight into their questions. Because this document is separate from the authoritative code, it may fall out of date. For clarity, please contact the project maintainers.

Host Watchdog

The host watchdog process monitors the actual server application. It exists mainly to facilitate the live update system built into it and also serves as a restart mechanism if requested. This component is generally not present in active development scenarios due to debugging overhead.

This consists of two parts: a runner and the watchdog library. The library is the Tgstation.Server.Host.Watchdog project, and the runners are the Tgstation.Server.Host.Console .NET Core project and the Tgstation.Server.Host.Service .NET Framework Windows service project.

Main Server

This is a second process, spawned by the Host Watchdog, which contains the vast majority of the code. It is the Tgstation.Server.Host project, which is fundamentally an ASP.NET Core MVC web application.

Server Initialization

The server's entrypoint is in the Tgstation.Server.Host.Program class. This class mainly determines if the Host watchdog is present and creates and runs the Tgstation.Server.Host.Server class. That class then builds an ASP.NET Core web host using the Tgstation.Server.Host.Core.Application class.

The Tgstation.Server.Host.Core.Application class has two methods called by the framework. First, the Tgstation.Server.Host.Core.Application.ConfigureServices method sets up dependency injection of interfaces for Controllers, the Tgstation.Server.Host.Database.DatabaseContext, and the component factories of the server. The framework handles constructing these once the application starts. Configuration is also loaded from the appropriate appsettings.yml into the Tgstation.Server.Host.Configuration classes for injection. Then the Tgstation.Server.Host.Core.Application.Configure method is run, which sets up the web request pipeline. The pipeline currently has the following stack of handlers:

- Catch any exceptions and respond with 500 and detailed HTML error page

- Respond with 503 if the application is still starting or shutting down

- Authenticate the JWT in the Authorization header if present and run Tgstation.Server.Host.Controllers.ApiController on success

- Catch database exceptions and convert to 409 responses with the exception's Tgstation.Server.Api.Models.ErrorMessage

- Check Tgstation.Server.Host.Controllers for the correct controller, run the action, and use its response

- If not properly authenticated beforehand and the action has a Tgstation.Server.Host.Controllers.TgsAuthorizeAttribute, return 401

- If not properly authorized beforehand according to the parameters of the action's Tgstation.Server.Host.Controllers.TgsAuthorizeAttribute (if present), return 403

- If the requested action does not exist, return 404
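The handler stack above can be read as a chain of checks, each of which either short-circuits with a status code or lets the request continue. The following is a minimal Python sketch of those outcomes, not the ASP.NET Core pipeline itself. `Request`, `resolve_status`, and every field name are hypothetical stand-ins, and the route lookup is checked before the authorization attribute here only because the attribute belongs to an action.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical, simplified view of an incoming request."""
    server_ready: bool = True        # False while starting or shutting down
    token_valid: bool = False        # JWT was present and validated
    action_exists: bool = True       # a controller action matches the route
    requires_auth: bool = True       # the action carries an authorize attribute
    has_permission: bool = True      # the user holds one of the required flags
    database_conflict: bool = False  # the action raised a database exception
    unexpected_error: bool = False   # the action raised anything else


def resolve_status(req: Request) -> int:
    """Return the HTTP status the documented handlers would produce."""
    try:
        if not req.server_ready:
            return 503
        if not req.action_exists:
            return 404
        if req.requires_auth and not req.token_valid:
            return 401
        if req.requires_auth and not req.has_permission:
            return 403
        if req.database_conflict:
            return 409
        if req.unexpected_error:
            raise RuntimeError("unhandled exception in action")
        return 200
    except RuntimeError:
        # Outermost handler: anything uncaught becomes a 500.
        return 500
```

For example, `resolve_status(Request(server_ready=False))` yields 503 regardless of the other fields, mirroring how the startup gate sits near the top of the stack.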

Instance Manager Initialization

Once the web host starts, the Tgstation.Server.Host.Components.InstanceManager.StartAsync function is called, because it is registered as an IHostedService in Tgstation.Server.Host.Core.Application. This is the only StartAsync implementation that should be called by the framework; others should be called from it to maintain a cohesive initialization order.

The first thing this function does is call Tgstation.Server.Host.Database.DatabaseContext.Initialize, which ensures the database is migrated, seeded, and ready to go. Then the Tgstation.Server.Host.Core.JobManager is started, which cleans up any jobs that are considered "still running" in the database. Finally, all instances configured to be online are created in parallel (see Instances for the onlining process) and the Tgstation.Server.Host.Core.Application is signalled to stop blocking requests with 503 responses before they are processed.

Database and Context

The database is exposed as a series of DbSet<T> objects through Tgstation.Server.Host.Database.IDatabaseContext. Queries are performed via async LINQ expressions. Inserts, updates, and deletes are done by modifying the DbSet<T>s and then calling Tgstation.Server.Host.Database.IDatabaseContext.Save. Do some reading on Entity Framework Core for a deeper understanding.

Controllers

The webserver operates in an MVC style. All requests are routed through the Tgstation.Server.Host.Controllers. If a route doesn't exist as an action in a controller, a 404 response will be returned. Controllers interact with components via injecting the Tgstation.Server.Host.Components.IInstanceManager interface, access the database with the Tgstation.Server.Host.Controllers.ApiController.DatabaseContext property, and start jobs by injecting the Tgstation.Server.Host.Core.IJobManager interface.

Security

The authentication process begins in Tgstation.Server.Host.Controllers.HomeController.CreateToken. This is where users log in. They must supply their username and password via the correct Tgstation.Server.Api.ApiHeaders. The server first attempts to use these credentials to log in to the system. If that succeeds, it checks whether the system user's UID is registered in the database. Failing either of those two steps, it tries to match the username and password to an entry in the database. If either method succeeds, the user is considered authenticated and a token is generated and sent back to the user. If the user is a system user, the context of their login is kept until one minute after their token expires.
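The fallback order described above can be sketched as follows. This is a simplified Python illustration, not the server's code: `authenticate`, `system_login`, `registered_system_ids`, and `database_check` are hypothetical names, and the real password comparison is a hash verification rather than a callback.

```python
from typing import Callable, Optional, Set


def authenticate(
    username: str,
    password: str,
    system_login: Callable[[str, str], Optional[str]],
    registered_system_ids: Set[str],
    database_check: Callable[[str, str], bool],
) -> Optional[str]:
    """Return "system" or "database" for the method that succeeded, else None."""
    # Step 1: try the credentials against the operating system.
    system_id = system_login(username, password)
    # Step 2: a system login only counts if its UID is registered.
    if system_id is not None and system_id in registered_system_ids:
        return "system"
    # Step 3: otherwise fall back to the database's own user entries.
    if database_check(username, password):
        return "database"
    return None
```

A caller would issue a token whenever the result is not `None`, and keep the system login context alive only for the `"system"` case.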

The password hashing used for database users is the standard provided by ASP.NET Core. It uses PBKDF2 with HMAC-SHA256, a 128-bit salt, a 256-bit subkey, and 10000 iterations. Read about it here: https://andrewlock.net/exploring-the-asp-net-core-identity-passwordhasher/
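To make those parameters concrete, here is a short Python sketch that derives a key with the same algorithm and sizes using the standard library. It illustrates the parameters only: it does not reproduce the byte layout ASP.NET Core stores, and `hash_password` and `verify_password` are hypothetical helper names.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 10_000  # iteration count stated above
SALT_BYTES = 16      # 128-bit salt
SUBKEY_BYTES = 32    # 256-bit subkey


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a subkey with PBKDF2-HMAC-SHA256, generating a salt if none is given."""
    if salt is None:
        salt = os.urandom(SALT_BYTES)
    subkey = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS, dklen=SUBKEY_BYTES
    )
    return salt, subkey


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the subkey and compare in constant time."""
    _, candidate = hash_password(password, salt)
    return hmac.compare_digest(candidate, expected)
```

The salt is stored alongside the subkey so that verification can repeat the same derivation.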

When this token is supplied in the Authorization header of a subsequent request, it is first cryptographically validated as having been issued by the current server. The token contains the user's ID, which is used to retrieve the user's info from the database and put it into a Tgstation.Server.Host.Security.IAuthenticationContext.

Nearly all exposed controller actions are decorated with a Tgstation.Server.Host.Controllers.TgsAuthorizeAttribute. This attribute does two things. First, it ensures the Tgstation.Server.Host.Security.IAuthenticationContext is valid for the request before running the action. Second, if it contains a permission flag specification, it responds with 403 if the user doesn't have at least one of the listed permissions.
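The two checks can be sketched with a flag enum. This is an illustrative Python model only: the `Rights` members below are invented for the example and do not correspond to the server's actual permission enums, and `authorize` is a hypothetical function name.

```python
from enum import Flag, auto
from typing import Optional


class Rights(Flag):
    """Hypothetical permission flags used only for this sketch."""
    NONE = 0
    READ = auto()
    WRITE = auto()
    DELETE = auto()


def authorize(user_rights: Optional[Rights], required: Rights = Rights.NONE) -> int:
    """Return the status the attribute would produce before the action runs."""
    if user_rights is None:
        # No valid authentication context for this request.
        return 401
    if required != Rights.NONE and not (user_rights & required):
        # A flag specification exists and the user holds none of the listed flags.
        return 403
    return 200
```

Because the check is a bitwise intersection, holding any single listed flag is enough to pass.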

Jobs

Long-running operations create Tgstation.Server.Host.Models.Job objects, which represent information about long-running tasks. These objects can be queried to find out who started them, whether they've been completed or cancelled, who cancelled them, their error message if any, and, in some cases, their progress percentage. The job will be created and supplied by the request that started it, but active/all jobs may also be queried.

Instances

Instances exist in two forms: their database metadata and their actual class. The class only exists if the instance is set to be Tgstation.Server.Api.Models.Instance.Online. This is where all the actual server management code lives. A single instance is made up of individual components that work with each other through their interfaces.

Instance Factory

This is responsible for creating the components and weaving them into the final Tgstation.Server.Host.Components.Instance. This happens automatically at server startup if an instance is configured to be online.

Repository Manager

The Tgstation.Server.Host.Components.Repository.IRepositoryManager is the gatekeeper for cloning and accessing a Tgstation.Server.Host.Components.Repository.IRepository. Only one instance of a repository can be in use at a time (due to the single-threaded nature of libgit2), so the repository manager contains a semaphore wait queue which hands out the repository to only one client at a time. All repository operations (aside from cloning and deleting) are performed by the actual repository object. This includes fetching, hard resets, checkouts, synchronizing, etc. Most state put into the Tgstation.Server.Api.Models.Repository object is read directly from libgit2, exceptions being credentials and boolean settings.
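The one-client-at-a-time hand-off can be sketched with a semaphore of size one. This Python example is an assumption-laden illustration of the pattern, not the manager's implementation: `RepositoryManager`, `load_repository`, and the placeholder repository value are hypothetical.

```python
import asyncio
from contextlib import asynccontextmanager


class RepositoryManager:
    """Hands out a single repository handle to one client at a time."""

    def __init__(self) -> None:
        self._semaphore = asyncio.Semaphore(1)

    @asynccontextmanager
    async def load_repository(self):
        # Callers queue here until the previous holder releases the repository.
        await self._semaphore.acquire()
        try:
            yield "repository"  # placeholder for the real repository object
        finally:
            self._semaphore.release()


async def demo() -> int:
    """Run five concurrent clients and report the peak number of holders."""
    manager = RepositoryManager()
    active = 0
    max_active = 0

    async def client() -> None:
        nonlocal active, max_active
        async with manager.load_repository():
            active += 1
            max_active = max(max_active, active)
            await asyncio.sleep(0)  # yield so other clients get a chance to run
            active -= 1

    await asyncio.gather(*(client() for _ in range(5)))
    return max_active
```

Running `asyncio.run(demo())` shows that the peak number of simultaneous holders never exceeds one, even though all five clients are started together.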

Byond

The BYOND installation setup is largely decoupled from the database. When a BYOND version is downloaded and installed by the Tgstation.Server.Host.Components.Byond.IByondManager, it is extracted to a directory titled with its version in the BYOND folder, accompanied by a text document stating which version it is. Platform-specific installation steps are handled by specific implementations of Tgstation.Server.Host.Components.Byond.IByondInstaller. When it comes time to use an executable, the manager provides a Tgstation.Server.Host.Components.Byond.IByondExecutableLock, which contains absolute paths to the DreamMaker and DreamDaemon executables.

Compiler and Deployment

The compilation process is a distinct series of steps:

- Retrieve necessary information from the database in Tgstation.Server.Host.Components.IInstance.CompileProcess

- Choose a uniquely named folder in the Game directory for deployment

- Acquire a Tgstation.Server.Host.Components.Byond.IByondExecutableLock

- Build the initial Tgstation.Server.Host.Models.CompileJob object from available data (Byond version, Revision, directory, etc)

- Announce the deployment through the chat bot system

- Create and copy the repository to the <target folder>/A directory

- Run the PreCompile hook

- Auto detect or check if the configured .dme is present

- Copy and apply static code modifications to the environment

- Run DreamMaker on the .dme

- Start a DreamDaemon instance to validate the DMAPI

- Run the PostCompile hook

- Copy <target folder>/A to <target folder>/B

- Symlink all GameStaticFiles to both the A and B directories

- Commit the Tgstation.Server.Host.Models.CompileJob to the database

If any of the above steps fails, the target directory is deleted and the deployment is considered failed. If all went well, after the Tgstation.Server.Host.Models.Job completes, the new CompileJob is loaded into the instance's Tgstation.Server.Host.Components.Deployment.IDmbFactory.
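The all-or-nothing behaviour of a deployment can be sketched as a list of steps run against a fresh target directory, with the directory removed on any failure. This Python sketch is illustrative only: `deploy`, `demo`, and the step callables are hypothetical, and the real steps are the ones listed above.

```python
import os
import shutil
import tempfile
from typing import Callable, List, Optional


def deploy(game_dir: str, name: str, steps: List[Callable[[str], None]]) -> Optional[str]:
    """Run each step against a new target directory; delete it if any step fails."""
    target = os.path.join(game_dir, name)
    os.makedirs(target)
    try:
        for step in steps:
            step(target)
    except Exception:
        # Any failing step aborts the deployment and removes its directory.
        shutil.rmtree(target, ignore_errors=True)
        return None
    return target


def demo():
    """Run one deployment that succeeds and one that fails."""
    def write_dmb(target: str) -> None:
        with open(os.path.join(target, "game.dmb"), "w") as f:
            f.write("compiled")

    def fail(target: str) -> None:
        raise RuntimeError("compile step failed")

    with tempfile.TemporaryDirectory() as game_dir:
        good = deploy(game_dir, "good", [write_dmb])
        bad = deploy(game_dir, "bad", [write_dmb, fail])
        return good is not None, bad, sorted(os.listdir(game_dir))
```

After `demo()` runs, only the successful deployment's directory remains in the temporary game directory.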

The DmbFactory is where the Watchdog gets the Tgstation.Server.Host.Components.Deployment.IDmbProvider instances to run. Each CompileJob loaded into it is given a lock count. The latest CompileJob holds 1 lock and every DreamDaemon instance running that CompileJob holds another. Loading a new CompileJob releases the initial lock, and when all other locks are released the CompileJob's directory is deleted. Any directories in the Game folder not in use are also deleted when the Instance starts.
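The lock counting described above behaves like reference counting. The following Python sketch models it under stated assumptions: `CompileJobLocks` and its methods are hypothetical names, and directory deletion is recorded in a list instead of touching the file system.

```python
from typing import Dict, List, Optional


class CompileJobLocks:
    """Tracks lock counts per compile job directory."""

    def __init__(self) -> None:
        self._locks: Dict[str, int] = {}
        self._latest: Optional[str] = None
        self.deleted: List[str] = []  # directories that would be removed from disk

    def load_new(self, directory: str) -> None:
        """Load a new compile job; it holds one lock for being the latest."""
        previous = self._latest
        self._latest = directory
        self._locks[directory] = 1
        if previous is not None:
            # Loading a new job releases the previous job's initial lock.
            self.release(previous)

    def acquire(self) -> str:
        """Take a lock on the latest job for a DreamDaemon instance."""
        if self._latest is None:
            raise RuntimeError("no compile job loaded")
        self._locks[self._latest] += 1
        return self._latest

    def release(self, directory: str) -> None:
        """Drop one lock; the directory is deleted when the count reaches zero."""
        self._locks[directory] -= 1
        if self._locks[directory] == 0:
            del self._locks[directory]
            self.deleted.append(directory)
```

With two running servers holding locks on an old job, loading a newer job does not delete the old directory until both servers have released it.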

Chat Bot System

The chat system is relatively simple. The Tgstation.Server.Host.Components.Chat.IChat manager creates Tgstation.Server.Host.Components.Chat.Providers.IProvider objects which do the IRC/Discord/etc messaging.

The relationship between providers and the manager is a bit messy at the time of this writing (returned (im)mutable classes that map ids, to ids, to ids...) but it works.

The few built-in chat commands query the necessary components to get their results. Custom chat commands are routed to the watchdog's active server and then the response is relayed back. The message information passed to DM code is documented in the tgs_chat_user datum in the DMAPI: https://github.com/tgstation/tgstation-server/blob/master/src/DMAPI/tgs.dm#L117

Static File Management

This is largely just a remote file explorer. The Tgstation.Server.Host.Controllers send requests to Tgstation.Server.Host.Components.StaticFiles.IConfiguration with an optional Tgstation.Server.Host.Security.ISystemIdentity. If the identity is present, Tgstation.Server.Host.Security.ISystemIdentity.RunImpersonated is used to do the reading/writing; otherwise it is done as normal.

The Tgstation.Server.Host.Components.StaticFiles.IConfiguration object is also responsible for things like generating .dme modifications, symlinking static files during deployment, and running hook scripts.

Watchdog

This is the core of tgstation-server, the component that starts, monitors, and updates DreamDaemon.

At its core, the watchdog operates using a hot/cold server setup. At any given moment there are two DreamDaemon instances running, only one of which players can see. If anything bad happens to that server, it is killed and the inactive server has its port changed to catch all the connections. If any changes need to be made to the configuration (port, security, compile job), the inactive server is killed and immediately relaunched with the new configuration. Whenever the active server reboots, the two servers change ports so as to minimize load times.

General chat messages and verbose logs can be used to track watchdog state.

That's a high-level view of things. Now let's get to the nitty-gritty.

Launch

First the most recent Tgstation.Server.Host.Components.Deployment.IDmbProvider is retrieved from the Tgstation.Server.Host.Components.Deployment.IDmbFactory twice, adding 2 locks.

This is used to launch a Tgstation.Server.Host.Components.Watchdog.ISessionController via the watchdog's Tgstation.Server.Host.Components.Watchdog.ISessionControllerFactory in the A directory of the DMB provider's Tgstation.Server.Host.Models.CompileJob. This will be designated the Alpha server.

Whenever DreamDaemon is launched by any part of the watchdog, we try to elevate its process priority to the equivalent of Windows' Above Normal.

Ten seconds are allowed to pass, then the Bravo server is launched via the same method, except in the B directory. We then wait for both servers to finish their initial startup lag, designate Alpha as the Active server, and pass it to the monitor. The active server will be told to close its port on reboot.

If the watchdog ever enters a failure state it can't recover from, it kills both servers and reruns this process to restart.

The Monitor

The monitor is responsible for handling every Tgstation.Server.Host.Components.Watchdog.MonitorActivationReason. It sleeps until one of these things happens. If multiple things happen at once, they are processed in their order of declaration.

The monitor maintains a Tgstation.Server.Host.Components.Watchdog.MonitorState which helps it make decisions on how to handle activation reasons. The Tgstation.Server.Host.Components.Watchdog.MonitorState.NextAction determines how multiple simultaneous events are handled in succession.
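Processing simultaneous events in declaration order can be sketched with an ordered enum. The Python below is a rough model only: the member names are derived from the section headings in this document, the declaration order is assumed to match the order of those headings, and the convention that a handler returns `False` to stop further processing is a hypothetical stand-in for `NextAction`.

```python
from enum import IntEnum
from typing import Callable, Dict, Iterable, List


class ActivationReason(IntEnum):
    """Assumed ordering, mirroring the headings in this document."""
    ACTIVE_SERVER_CRASHED = 0
    INACTIVE_SERVER_CRASHED = 1
    ACTIVE_SERVER_REBOOTED = 2
    INACTIVE_SERVER_REBOOTED = 3
    NEW_DMB_AVAILABLE = 4


def process(
    pending: Iterable[ActivationReason],
    handlers: Dict[ActivationReason, Callable[[], bool]],
) -> List[ActivationReason]:
    """Handle pending reasons in declaration order; stop if a handler returns False."""
    handled: List[ActivationReason] = []
    for reason in sorted(pending):
        handled.append(reason)
        handler = handlers.get(reason, lambda: True)
        if handler() is False:
            break  # the handler asked to stop processing further reasons
    return handled
```

If an active server crash and a new DMB arrive together, the crash is handled first, and its handler can prevent the second event from being processed in the same pass.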

Active Server Crashed/Exited

If there was a graceful shutdown scheduled, exit the watchdog

Otherwise, if the inactive server has critfailed or is still booting, restart the watchdog

Otherwise, try to set the inactive server's port to the active server port and swap their designations. Failing that, restart the watchdog.

Otherwise, attempt to reboot the once active now inactive server with the latest settings, failing that, mark it as critfailed.

Stop processing further activation reasons
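The decision sequence for an active server crash can be written as a small function. This Python sketch is an interpretation of the steps above, with hypothetical parameter names and action strings; it returns the actions the monitor would take in order.

```python
from typing import List


def on_active_server_crashed(
    graceful_shutdown: bool,
    inactive_ready: bool,
    swap_ok: bool,
    reboot_ok: bool,
) -> List[str]:
    """Return the ordered actions taken when the active server crashes or exits."""
    if graceful_shutdown:
        return ["exit watchdog"]
    if not inactive_ready:
        # The inactive server has critfailed or is still booting.
        return ["restart watchdog"]
    if not swap_ok:
        # The inactive server could not take over the active port.
        return ["restart watchdog"]
    actions = ["swap designations"]
    if reboot_ok:
        actions.append("reboot old active server")
    else:
        actions.append("mark old active server critfailed")
    actions.append("stop processing")
    return actions
```

Only the path where the port swap succeeds reaches the reboot attempt; every earlier failure ends in exiting or restarting the watchdog.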

Inactive Server Crashed/Exited

Attempt to reboot the inactive server with the latest settings, failing that, mark it as critfailed.

Active Server Rebooted

Generally, at this point, the active server's port has been closed (unless it isn't for some reason) by the DMAPI

If there was a graceful shutdown scheduled, exit the watchdog

Otherwise, if the inactive server has critfailed or is still booting, restart the watchdog

Otherwise, if the active server needs a new DMB, a graceful restart, or settings update, kill the active server

If the port isn't closed, exit this activation reason. We wanted to keep it open for a reason.

Try to set the inactive server's port to the active server port and swap their designations. Failing that, restart the watchdog.

Set the current active server to close its port on reboot.

If we didn't kill the now inactive server and got here, set it to NOT close its port on reboot and try to set its port to the internal game port (the DMAPI and SessionController have a method for communicating a new port to open on even if it's closed).

Failing the above case, or if we killed the now inactive server, attempt to reboot it with the latest settings and stop processing other activation reasons. Failing that, mark it as critfailed.

Otherwise, skip processing the Tgstation.Server.Host.Components.Watchdog.MonitorActivationReason.InactiveServerRebooted activation reason.

Inactive Server Rebooted

Mark the inactive server as rebooting and tell it to NOT close its port on reboot.

Inactive Server Startup Complete

Mark the inactive server as ready and tell it to close its port on reboot.

New Dmb Available or Launch Settings Changed

Attempt to reboot the inactive server with the latest settings, failing that, mark it as critfailed

Reattaching

When TGS reboots for an update or via admin command it keeps the child DreamDaemon processes alive and saves state information to regain control of them when it comes back online. The saved state is entered as the Tgstation.Server.Host.Models.WatchdogReattachInformation for the instance in the database.

When the instance comes online again it will automatically attempt to reattach if said information is present. The information is deleted as soon as it is loaded.

If both servers reattached, the monitor starts.

If the active server failed to reattach, the watchdog is restarted.

If only the inactive server failed to reattach, it is mocked with a Tgstation.Server.Host.Components.Watchdog.DeadSessionController, which will immediately trigger Tgstation.Server.Host.Components.Watchdog.MonitorActivationReason.InactiveServerCrashed. Then the monitor starts.

Communication

TGS => DM communication is achieved by sending packets which invoke /world/Topic() and DM can respond to those in kind. Tgstation.Server.Host.Components.EventType and other command messages are communicated to the active server via this method.

DM => TGS communication was initially meant to use world.Export() to access a controller and route the request to the specific instance. Due to an issue with inherited handles (https://github.com/tgstation/tgstation-server/issues/71) that was causing problems but went undiagnosed for a long time, this idea was scrapped. It will soon return to replace the current implementation, however (https://github.com/tgstation/tgstation-server/issues/668).

DM => TGS communication currently works like so:

- DM writes json to a specific file given in the initial launch json then enters a timeout sleep loop

- The Host watches for and reads the json

- The command is processed via the Tgstation.Server.Host.Components.Interop.ICommHandler for the DreamDaemon instance

- The response is sent as a topic

- DM writes the topic response into a variable

- The sleep loop breaks when it reads this variable and returns the result
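The host side of the file-based exchange above can be sketched as a single polling step. This Python example is a simplified illustration under assumptions: `poll_command_file` is a hypothetical name, the JSON shape is invented, and deleting the file after reading is an assumption made so the command is handled once. Sending the response back as a topic is represented only by the return value.

```python
import json
import os
import tempfile
from typing import Any, Callable, Optional


def poll_command_file(path: str, handler: Callable[[Any], str]) -> Optional[str]:
    """Read one JSON command from `path` if present and return the handler's response."""
    if not os.path.exists(path):
        return None  # nothing written by DM yet
    with open(path, "r", encoding="utf-8") as f:
        command = json.load(f)
    os.remove(path)  # assumption: consume the file so the command runs once
    return handler(command)


def demo():
    """Write a command as DM would, then poll twice."""
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "command.json")
        with open(path, "w", encoding="utf-8") as f:
            json.dump({"command": "reboot"}, f)
        first = poll_command_file(path, lambda c: "ack:" + c["command"])
        second = poll_command_file(path, lambda c: "unexpected")
        return first, second
```

The second poll finds no file and returns `None`, which corresponds to the host continuing to wait for the next command.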

Communication is necessary for the watchdog to keep things running smoothly from a game client's perspective. Realistically, though, due to how it works, the only absolutely required message is the server reboot notification, so that it is known when to apply updates.

Host Update Process

There are actually two processes involved in a proper TGS setup: the actual Tgstation.Server.Host .NET Core application and the component that runs the Tgstation.Server.Host.Watchdog (at this time, either Tgstation.Server.Host.Service or Tgstation.Server.Host.Console). The directory structure of the setup is like so:

/app_directory

- /lib

- /Default

- Initial Tgstation.Server.Host executable files

- Space for updates to be installed

- /Default

- Host.Watchdog executable files

The Host.Watchdog launches the Host with a designated path in the /lib folder. When the Host process wants to update, it extracts the new Host package to this directory and exits with code 1. The watchdog then attempts to rename the current /lib/Default directory to something unique, rename the update directory to /lib/Default, and then launch the new Host process.
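The directory swap performed by the watchdog can be sketched with two renames. This Python example is a minimal model, not the watchdog's code: `apply_update`, the `old-` prefix, and the update directory name are hypothetical, and the rollback on a failed second rename is an assumption that the text above does not describe.

```python
import os
import tempfile
import uuid


def apply_update(lib_dir: str, update_dir_name: str) -> bool:
    """Move lib/Default aside under a unique name and promote the update directory."""
    default = os.path.join(lib_dir, "Default")
    update = os.path.join(lib_dir, update_dir_name)
    if not os.path.isdir(update):
        return False  # nothing was extracted, so there is nothing to apply
    backup = os.path.join(lib_dir, "old-" + uuid.uuid4().hex)
    os.rename(default, backup)
    try:
        os.rename(update, default)
    except OSError:
        os.rename(backup, default)  # assumption: restore the previous version
        return False
    return True


def demo():
    """Create a v1 install plus an extracted v2 update, then apply it."""
    with tempfile.TemporaryDirectory() as lib_dir:
        for name, version in (("Default", "v1"), ("update", "v2")):
            os.mkdir(os.path.join(lib_dir, name))
            with open(os.path.join(lib_dir, name, "host.txt"), "w") as f:
                f.write(version)
        applied = apply_update(lib_dir, "update")
        with open(os.path.join(lib_dir, "Default", "host.txt")) as f:
            return applied, f.read(), len(os.listdir(lib_dir))
```

After the swap, the Default directory holds the new files and the previous version survives under its unique name, so two directories remain in the lib folder.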

The Host.Watchdog serves the additional purpose of automatically restarting the Host in the case of a fatal crash (which should never happen, but the additional layer of safety is nice).